Edge computing is exploding right now, and we are not just talking about hardware to support a retail system or manufacturing plant. The AI and IoT revolution is upon us.

It is hard to go half a day without hearing about some new AI model that is going to revolutionise the way we do "x", or that a bunch of clever folks have invented the next device that's going to save the world, improve safety or even just make our lives easier.

Internet of Things (IoT) devices alone are predicted to balloon to around 29 billion by 2030, up from approximately 20 billion in 2020. As we put more of these devices into every aspect of our lives, from smart cities to autonomous vehicles, we can expect the amount of data to explode along with them.

New high-speed networking options are arriving in urban environments, but they are not available everywhere, remote sites being the obvious example. The volume of data these devices generate today is already too large in some cases to push across networks in a timely or cost-effective manner. Because of this, we are seeing data either processed at the edge or retained for longer periods until the "device" comes back into a depot and can be offloaded.
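To put the network problem into rough numbers, here is a minimal sketch of how long a day's worth of edge data would take to upload. The 6 TB figure, the link speeds and the 80% link efficiency are all illustrative assumptions, not measurements.

```python
def transfer_hours(data_tb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Hours needed to move data_tb (decimal terabytes) over a link_mbps (megabit/s) link.

    efficiency is an assumed fraction of the nominal link speed that is
    actually usable after protocol overhead and contention.
    """
    bits = data_tb * 1e12 * 8                  # terabytes -> bits
    usable_bps = link_mbps * 1e6 * efficiency  # usable bits per second
    return bits / usable_bps / 3600            # seconds -> hours


if __name__ == "__main__":
    daily_data_tb = 6  # assumed daily volume for a single edge device
    for link in (50, 100, 1000):  # assumed uplink speeds in Mbit/s
        hours = transfer_hours(daily_data_tb, link)
        print(f"{link:>5} Mbit/s: {hours:.1f} hours to move {daily_data_tb} TB")
```

Under these assumptions, anything slower than a gigabit-class link takes longer than a day to move a day's data, so the backlog only grows. That is the case for processing or holding the data locally.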

Then there is performance. With the explosion of data comes a performance requirement: edge storage must be able to keep up, not only with the peak throughput demands of sensors and devices but also with the endurance demands of constant writes. Depending on the application and use case, we are seeing tens if not hundreds of terabytes being written and read every day.

Let's take a look at some metrics across a couple of verticals that Solidigm presented to put this all into context.

- Smart vehicles (self-driving, for example) are generating 1.5TB to 19TB per hour depending on the sensor density, which equates to petabytes written per year.

- Smart agriculture is in on this too. John Deere have "See & Spray" smart spraying machines which take around 20 crop images per second across their 36 cameras, generating a whopping 6TB/day and needing 10 CPUs to perform the processing.
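As a quick sanity check on the "petabytes per year" claim, the figures above can be annualised with a few lines of Python. The operating hours and operating days are assumptions made purely for illustration; real duty cycles will differ by fleet and by season.

```python
HOURS_PER_DAY = 8    # assumption: hours a vehicle is in operation each day
DAYS_PER_YEAR = 365  # assumption: the machine operates every day of the year


def pb_per_year_from_hourly(tb_per_hour: float,
                            hours_per_day: float = HOURS_PER_DAY,
                            days: int = DAYS_PER_YEAR) -> float:
    """Annual data volume in petabytes for a device that writes tb_per_hour."""
    return tb_per_hour * hours_per_day * days / 1000


def pb_per_year_from_daily(tb_per_day: float, days: int = DAYS_PER_YEAR) -> float:
    """Annual data volume in petabytes for a device that writes tb_per_day."""
    return tb_per_day * days / 1000


if __name__ == "__main__":
    low = pb_per_year_from_hourly(1.5)   # low end of the smart vehicle range
    high = pb_per_year_from_hourly(19)   # high end of the smart vehicle range
    sprayer = pb_per_year_from_daily(6)  # smart sprayer at 6TB/day
    print(f"Smart vehicle: {low:.2f} to {high:.2f} PB/year")
    print(f"Smart sprayer: {sprayer:.2f} PB/year")
```

With these assumptions a single vehicle lands somewhere between roughly 4 and 55 PB per year, and a sprayer that ran every day would write a little over 2 PB.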

Obviously these are just two examples, and probably at the extreme end (today), but this is only going to become more common.

Solidigm QLC SSD

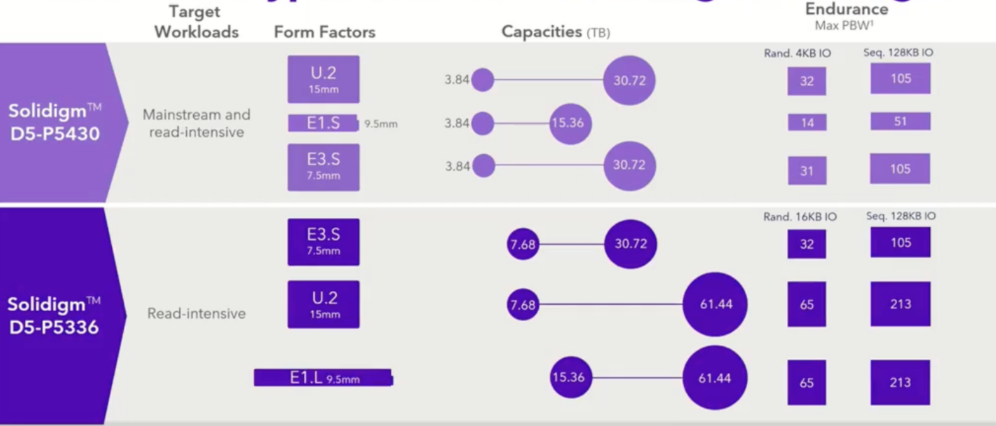

Solidigm have an impressive portfolio of battle-tested QLC drives that come in a number of form factors and sizes, as shown in the diagram.

Impressively, they have an industry-leading 61.44TB capacity available today, which means that for ultra-dense requirements you can fit 2 petabytes into a 1RU footprint!
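The density claim is easy to check with some back-of-the-envelope arithmetic. The sketch below assumes a 1RU chassis with 32 drive slots, which is an assumption on my part and not a figure from Solidigm, and it counts raw capacity only (no RAID, erasure coding or filesystem overhead).

```python
import math

DRIVE_TB = 61.44  # largest drive capacity mentioned above, in decimal terabytes
SLOTS_1RU = 32    # assumption: number of drive slots in a dense 1RU chassis


def raw_capacity_pb(num_drives: int, drive_tb: float = DRIVE_TB) -> float:
    """Raw capacity in petabytes for num_drives drives of drive_tb each."""
    return num_drives * drive_tb / 1000


def drives_needed(target_tb: float, drive_tb: float = DRIVE_TB) -> int:
    """Smallest number of drives whose raw capacity reaches target_tb."""
    return math.ceil(target_tb / drive_tb)


if __name__ == "__main__":
    print(f"{SLOTS_1RU} drives: {raw_capacity_pb(SLOTS_1RU):.2f} PB raw")
    print(f"Drives needed for a full 2 PB: {drives_needed(2000)}")
```

Under that slot-count assumption, a full chassis comes out just under 2 PB raw, which is where the "2 petabytes in 1RU" headline figure plausibly comes from.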

Not only do they have a market-leading range of form factors and sizes, but they have the performance stats to back it all up. For data-intensive workloads, the D5-P5336 dominated in performance and endurance scoring.

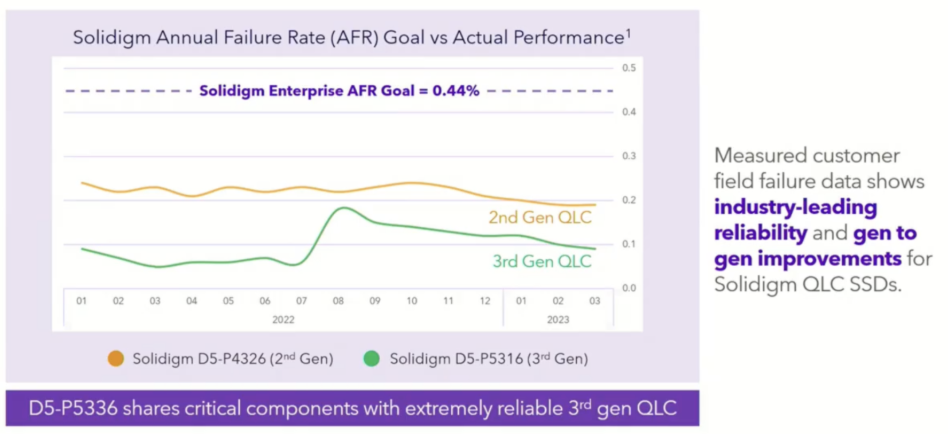

And what about reliability? Well, they have some of the best failure rate figures in the market today. They are consistently sitting well below their annual failure rate target, and each generation of the product keeps getting better.

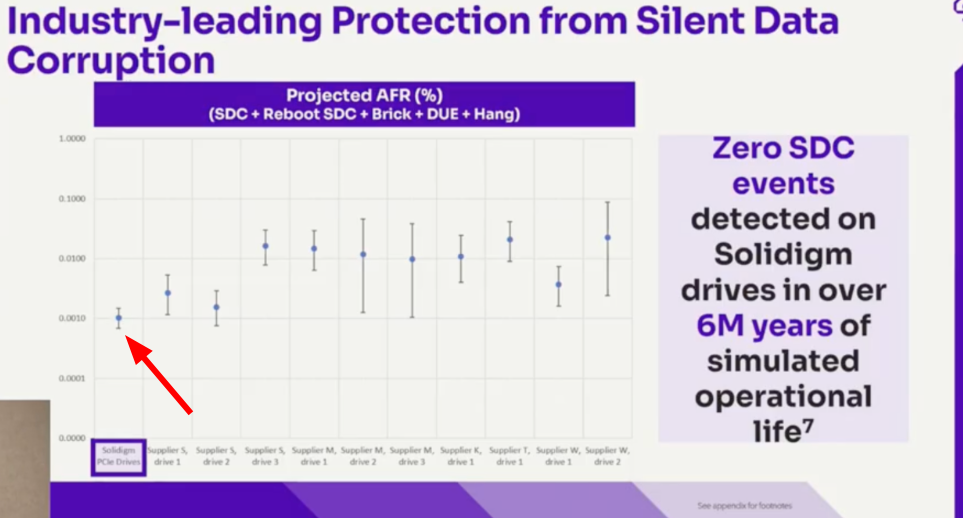

On top of a very low failure rate, their products showed very low silent data corruption figures compared with other players in this market in a lab test equivalent to 6M years of operational life.

This is thanks to their overall design and firmware.

What do others think about the Solidigm drives?

For those who do not believe glossy marketing slides or brochures from suppliers stating their impressive performance and durability numbers, I've got you! Well, Jordan from StorageReview.com does anyway...

He beat up the drives in an independent review, so much so that he is quoted as saying "QLC is here, and it's ready" when talking about the datacenter, not just edge applications.

His tests tortured the drives for 54 days straight, calculating Pi out to 100 trillion digits and beating a world record along the way.

The lab contained 19 x Solidigm 30.72TB QLC D5-P5316 SSDs.

The tests equated to around 29TB written to each drive on average. The wear rate after the test had completed was so low that you could continue the test for almost a decade before a drive would run out of life, well past the 5-year warranty of the QLC drives themselves.

Conclusion

Solidigm have an industry-leading range of drives backed by performance and durability numbers that just make sense, especially in edge environments with a heavy storage requirement. Whether that is total volume or the amount of data generated, it doesn't matter! They have a solution for it all.

It doesn't stop there though. With a proven track record and years in the industry behind them, I believe these drives would not be out of place in ANY data-intensive application, be that at the edge or in the datacenter.